Rendering in VR using OpenGL instancing

21 May 2016

TL;DR: download the code sample from GitHub!

In all of my VR applications thus far, I've been using separate eye buffers for rendering, mostly out of convenience. Recently, however, I started wondering how I could improve drawing times and reduce unnecessary overhead, so my attention turned toward a single render target solution and how it could take advantage of instanced rendering. Here's a short summary of my results.

To briefly recap, there are two distinct ways to render to the HMD (in this particular case, I'll be focusing on the Oculus Rift):

1. Create two render targets (one per eye) and draw the scene to each one of them accordingly.

2. Create a single, large render target and draw each eye into its own viewport within it.
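For the second option, a common arrangement (assumed here, not dictated by the SDK) is to put the two eye images side by side: the shared target is as wide as both eye images together and as tall as the taller of the two. A minimal sketch of that bookkeeping, using hypothetical Size/Rect structs in place of the SDK's ovrSizei/ovrRecti, which also flattens the two rectangles into the {x, y, width, height} float quadruples that glViewportArrayv() reads:

```cpp
#include <algorithm>
#include <array>
#include <cstddef>

// Hypothetical stand-ins for the SDK's ovrSizei / ovrRecti (not the real types).
struct Size { int w, h; };
struct Rect { int x, y, w, h; };

struct SideBySideLayout {
    Size target;              // size of the single shared render target
    std::array<Rect, 2> eye;  // eye[0] = left, eye[1] = right
};

// Place both eye images next to each other in one render target.
// 'left' / 'right' are the per-eye image sizes (e.g. the SDK's recommended
// texture sizes); in this sketch they are just plain inputs.
constexpr SideBySideLayout MakeSideBySideLayout(Size left, Size right) {
    SideBySideLayout layout{};
    layout.target = { left.w + right.w, std::max(left.h, right.h) };
    layout.eye[0] = { 0,      0, left.w,  left.h  };  // left half
    layout.eye[1] = { left.w, 0, right.w, right.h };  // right half
    return layout;
}

// Flatten the two rectangles into {x, y, w, h, x, y, w, h},
// the float layout glViewportArrayv(0, 2, data) reads.
constexpr std::array<float, 8> ToViewportArray(const SideBySideLayout& layout) {
    std::array<float, 8> v{};
    for (std::size_t i = 0; i < 2; ++i) {
        const Rect& r = layout.eye[i];
        v[i * 4 + 0] = static_cast<float>(r.x);
        v[i * 4 + 1] = static_cast<float>(r.y);
        v[i * 4 + 2] = static_cast<float>(r.w);
        v[i * 4 + 3] = static_cast<float>(r.h);
    }
    return v;
}
```

In the instanced code below, the eye viewports come straight from the SDK (g_oculusVR.GetEyeViewport()), so this is only meant to show how the two eyes fit into one target.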

How either approach is implemented is not prescribed, so it's up to the programmer to figure out how to produce both images. Usually, the first idea that comes to mind is to simply recalculate the MVP matrix for each eye every frame and render the scene twice, which may look like this in C++ pseudocode:

```cpp
for (int eyeIndex = 0; eyeIndex < ovrEye_Count; eyeIndex++)
{
    // recalculate ModelViewProjection matrix for current eye
    OVR::Matrix4f MVPMatrix = g_oculusVR.OnEyeRender(eyeIndex);

    // setup scene's shaders and positions using MVPMatrix
    // setup of HMD viewports and buffers goes here
    // (...)

    // final image ends up in correct viewport/render buffer of the HMD
    glDrawArrays(GL_TRIANGLE_STRIP, 0, num_verts);
}
```

This works fine, but what we're essentially doing is doubling the number of draw calls by rendering everything twice. With modern GPUs this may not necessarily be a big deal; however, CPU <-> GPU communication quickly becomes the bottleneck as scene complexity goes up. During my tests, rendering a scene with 2500 quads and no culling resulted in a drastic framerate drop and an increase in GPU rendering time. With Oculus SDK 1.3 this can, in fact, go unnoticed thanks to asynchronous timewarp, but we don't want to deal with performance losses! This is where instancing can provide a significant boost.

In a nutshell, instancing lets us render multiple instances (hence the name) of the same geometry with a single draw call. This means we can draw the entire scene multiple times as if we were drawing it only once (not entirely true, since the GPU still processes the geometry once per instance, but for our purposes we can assume it works that way). In our case, the number of draw calls is cut in half, and we end up with code that may look like this:

```cpp
// MVP matrices for left and right eye (2 x 16 floats)
GLfloat mvps[32];

// look up the EyeMVPs uniform block and assign it to binding point 0
GLuint mvpBinding = 0;
GLuint blockIdx = glGetUniformBlockIndex(shader_id, "EyeMVPs");
glUniformBlockBinding(shader_id, blockIdx, mvpBinding);

// fetch MVP matrices for both eyes
for (int i = 0; i < 2; i++)
{
    OVR::Matrix4f MVPMatrix = g_oculusVR.OnEyeRender(i);
    // OVR matrices are row-major; transpose to match GLSL's column-major mat4
    memcpy(&mvps[i * 16], &MVPMatrix.Transposed().M[0][0], sizeof(GLfloat) * 16);
}

// update MVP UBO with new eye matrices
glBindBuffer(GL_UNIFORM_BUFFER, mvpUBO);
glBufferData(GL_UNIFORM_BUFFER, 2 * sizeof(GLfloat) * 16, mvps, GL_STREAM_DRAW);
glBindBufferRange(GL_UNIFORM_BUFFER, mvpBinding, mvpUBO, 0, 2 * sizeof(GLfloat) * 16);

// at this point we have both viewports calculated by the SDK, fetch them
ovrRecti viewPortL = g_oculusVR.GetEyeViewport(0);
ovrRecti viewPortR = g_oculusVR.GetEyeViewport(1);

// viewport array: {x, y, w, h} per eye, selected via gl_ViewportIndex in the geometry shader
GLfloat viewports[] = { (GLfloat)viewPortL.Pos.x,  (GLfloat)viewPortL.Pos.y,
                        (GLfloat)viewPortL.Size.w, (GLfloat)viewPortL.Size.h,
                        (GLfloat)viewPortR.Pos.x,  (GLfloat)viewPortR.Pos.y,
                        (GLfloat)viewPortR.Size.w, (GLfloat)viewPortR.Size.h };
glViewportArrayv(0, 2, viewports);

// setup the scene and perform instanced render - half the draw calls!
// (...)
glDrawArraysInstanced(GL_TRIANGLE_STRIP, 0, num_verts, 2);
```

There’s a bit more going on now, so let’s go through the pseudocode step by step:

```cpp
// MVP matrices for left and right eye (2 x 16 floats)
GLfloat mvps[32];

// look up the EyeMVPs uniform block and assign it to binding point 0
GLuint mvpBinding = 0;
GLuint blockIdx = glGetUniformBlockIndex(shader_id, "EyeMVPs");
glUniformBlockBinding(shader_id, blockIdx, mvpBinding);

// fetch MVP matrices for both eyes
for (int i = 0; i < 2; i++)
{
    OVR::Matrix4f MVPMatrix = g_oculusVR.OnEyeRender(i);
    // OVR matrices are row-major; transpose to match GLSL's column-major mat4
    memcpy(&mvps[i * 16], &MVPMatrix.Transposed().M[0][0], sizeof(GLfloat) * 16);
}
```

At the start of each frame, we recalculate the MVP matrix for each eye just as before. This time, however, it is the only thing we do in the loop. The results are stored in a GLfloat array, since this will be the shader input when drawing both eyes (a 4×4 matrix is 16 floats, so we need a 32-element array to store both). The matrices will be stored in a uniform buffer object, so we first need to fetch the index of the uniform block and assign it to a binding point before we can perform the update.
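The Transposed() call in the loop is about memory layout: OVR::Matrix4f stores its elements row by row (M[row][col]), while a GLSL mat4 inside a std140 uniform block is column-major unless declared otherwise. A minimal sketch of the same packing done by hand, with a hypothetical Mat4 struct standing in for OVR::Matrix4f, makes the index mapping explicit:

```cpp
#include <array>
#include <cstddef>

// Hypothetical stand-in for OVR::Matrix4f: row-major storage, m[row][col].
struct Mat4 { float m[4][4]; };

// std140 lays out 'mat4 mvp[2]' as 2 matrices x 4 columns x vec4 = 128 bytes,
// i.e. exactly 32 tightly packed 32-bit floats.
static_assert(sizeof(float) == 4, "std140 packing assumes 32-bit floats");

// Pack both eye matrices into the 32 floats backing the EyeMVPs block.
// GLSL's mat4 is column-major by default, so element (row, col) of eye e
// lands at index e*16 + col*4 + row. This gives the same result as
// memcpy'ing the transposed OVR matrix.
constexpr std::array<float, 32> PackEyeMVPs(const Mat4& left, const Mat4& right) {
    std::array<float, 32> out{};
    const Mat4* eyes[2] = { &left, &right };
    for (std::size_t e = 0; e < 2; ++e)
        for (std::size_t row = 0; row < 4; ++row)
            for (std::size_t col = 0; col < 4; ++col)
                out[e * 16 + col * 4 + row] = eyes[e]->m[row][col];
    return out;
}
```

The alternative is to skip the transpose on the CPU and declare the block as layout(std140, row_major) in GLSL; either way, what matters is that both sides agree on the element order.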

```cpp
// update MVP UBO with new eye matrices
glBindBuffer(GL_UNIFORM_BUFFER, mvpUBO);
glBufferData(GL_UNIFORM_BUFFER, 2 * sizeof(GLfloat) * 16, mvps, GL_STREAM_DRAW);
glBindBufferRange(GL_UNIFORM_BUFFER, mvpBinding, mvpUBO, 0, 2 * sizeof(GLfloat) * 16);

// at this point we have both viewports calculated by the SDK, fetch them
ovrRecti viewPortL = g_oculusVR.GetEyeViewport(0);
ovrRecti viewPortR = g_oculusVR.GetEyeViewport(1);

// viewport array: {x, y, w, h} per eye, selected via gl_ViewportIndex in the geometry shader
GLfloat viewports[] = { (GLfloat)viewPortL.Pos.x,  (GLfloat)viewPortL.Pos.y,
                        (GLfloat)viewPortL.Size.w, (GLfloat)viewPortL.Size.h,
                        (GLfloat)viewPortR.Pos.x,  (GLfloat)viewPortR.Pos.y,
                        (GLfloat)viewPortR.Size.w, (GLfloat)viewPortR.Size.h };
glViewportArrayv(0, 2, viewports);

// setup the scene and perform instanced render - half the draw calls!
// (...)
glDrawArraysInstanced(GL_TRIANGLE_STRIP, 0, num_verts, 2);
```

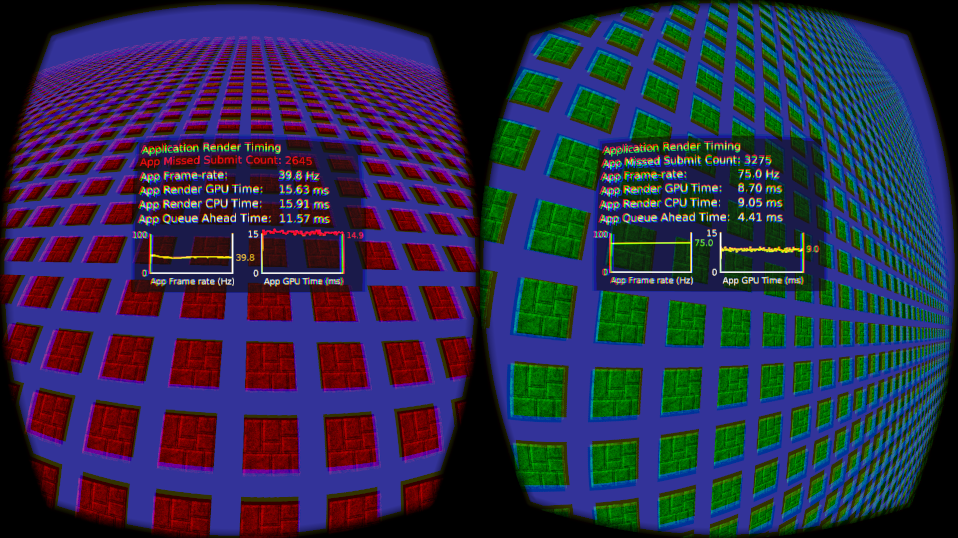

First, we update the UBO storing both MVPs with new calculated values, after which we get to rendering part. Contrary to DirectX, there’s no trivial way to draw to multiple viewports using single draw call in OpenGL, so we’re taking advantage of a (relatively) new feature: viewport arrays. This, combined with the gl_ViewportIndex attribute in a geometry shader will allow us to tell glDrawArraysInstanced() which rendered instance goes into which eye. Final result and performance graphs can be seen on the following screenshot:

Test application rendering 2500 unculled, textured quads. Left: rendering scene twice, once per viewport. Right: using instancing.
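The host code only covers the CPU side; the shaders are not listed here. Below is a minimal sketch of what they could look like, assuming GLSL 4.10 (viewport arrays became core in OpenGL 4.1) and kept as C++ raw string literals ready for glShaderSource(). The vertex shader uses gl_InstanceID (0 or 1) to pick the eye's matrix from the EyeMVPs block and forwards that index; the geometry shader writes it to gl_ViewportIndex. The attribute locations, the mvp member name, the varyings and the diffuseTex sampler are hypothetical; only the EyeMVPs block name comes from the code above.

```cpp
#include <string_view>

// Vertex shader: gl_InstanceID selects the eye's MVP (hypothetical names).
constexpr std::string_view kEyeVertexShader = R"(#version 410 core
layout(location = 0) in vec3 inPosition;
layout(location = 1) in vec2 inTexCoord;

layout(std140) uniform EyeMVPs {
    mat4 mvp[2];   // [0] = left eye, [1] = right eye
};

out vec2 vTexCoord;
flat out int vEye;

void main() {
    vTexCoord   = inTexCoord;
    vEye        = gl_InstanceID;
    gl_Position = mvp[gl_InstanceID] * vec4(inPosition, 1.0);
}
)";

// Geometry shader: passes each triangle through and routes it to the
// viewport matching the instance (= eye) it came from.
constexpr std::string_view kEyeGeometryShader = R"(#version 410 core
layout(triangles) in;
layout(triangle_strip, max_vertices = 3) out;

in vec2 vTexCoord[];
flat in int vEye[];

out vec2 gTexCoord;

void main() {
    for (int i = 0; i < 3; ++i) {
        gl_ViewportIndex = vEye[0];   // 0 = left viewport, 1 = right viewport
        gl_Position      = gl_in[i].gl_Position;
        gTexCoord        = vTexCoord[i];
        EmitVertex();
    }
    EndPrimitive();
}
)";

// Fragment shader: plain textured output for the test quads.
constexpr std::string_view kEyeFragmentShader = R"(#version 410 core
in vec2 gTexCoord;
uniform sampler2D diffuseTex;
out vec4 fragColor;

void main() {
    fragColor = texture(diffuseTex, gTexCoord);
}
)";
```

gl_ViewportIndex is written before every EmitVertex() because geometry shader outputs are undefined after each emit. Feeding GL_TRIANGLE_STRIP geometry is fine: a geometry shader declared with layout(triangles) in receives the strip's individual triangles.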

Full source code of the test application can be downloaded from GitHub.